Introduction

Web scraping is the programmatic extraction of information from websites. It is useful for many tasks: gathering data for analysis, collecting material for articles, or building datasets for machine learning and deep learning.

Basics

Scraping can be broadly split into two parts: fetching the contents of a web page, and parsing them. We can send a GET request with a library like requests to retrieve the page's HTML, and then parse that HTML with BeautifulSoup.
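Because the two steps are independent, it can help to keep them in separate functions: the parser only needs an HTML string, so it can be tried out on a hand-written snippet without touching the network. This is a minimal sketch; the function names `fetch_html` and `parse_title` are hypothetical, and the 10-second timeout is an arbitrary choice.

```python
import requests
from bs4 import BeautifulSoup


def fetch_html(url: str) -> str:
    """Step 1 (hypothetical helper): download the page and return its HTML as text."""
    response = requests.get(url, timeout=10)  # timeout value is an arbitrary choice
    response.raise_for_status()  # raise an exception on 4xx/5xx status codes
    return response.text


def parse_title(html: str) -> str:
    """Step 2 (hypothetical helper): parse the HTML and return the <title> text."""
    soup = BeautifulSoup(html, "html.parser")
    return soup.title.get_text(strip=True) if soup.title else ""


# The parsing step can be exercised offline on a literal snippet
print(parse_title("<html><head><title> Example page </title></head></html>"))
# prints: Example page
```

In a real run you would chain the two, for example `parse_title(fetch_html(url))`.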

First, let's install the libraries we are going to use (requests, BeautifulSoup and pandas). Note that BeautifulSoup is published on PyPI as beautifulsoup4:

```
pip install requests pandas beautifulsoup4
```

Make a request:

```python
import requests

url = "https://in.reuters.com/"
data = requests.get(url)
print(data.content)
```

This prints the raw content returned by that URL, that is, the page's HTML as bytes.

Parse the response

```python
import requests
from bs4 import BeautifulSoup

url = "https://in.reuters.com/"
data = requests.get(url)

# Convert the raw HTML into a parse tree that we can search
soup = BeautifulSoup(data.content, 'html.parser')

print(soup.find_all('div', class_="edition"))
```

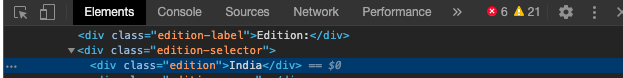

The code above retrieves the page, converts it into a parsable form, and extracts the edition we are currently viewing. It does this with the `find_all` method, passing in the HTML tag (in this case `<div>`) and the class name (`edition`). `find_all` returns a list of every matching element.
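To see how `find_all` differs from its sibling `find`, here is a sketch on a small hypothetical snippet (not Reuters' real markup). The keyword is spelled `class_` because `class` is a reserved word in Python, and it matches any element whose class list contains the given name.

```python
from bs4 import BeautifulSoup

# Hypothetical markup, for illustration only
html = """
<div class="edition">India</div>
<div class="edition current">United States</div>
<div class="nav">Markets</div>
"""

soup = BeautifulSoup(html, "html.parser")

# find_all returns a list of every matching tag (empty if nothing matches);
# "edition current" also matches because class is a multi-valued attribute
editions = soup.find_all("div", class_="edition")
print([tag.get_text() for tag in editions])  # ['India', 'United States']

# find returns only the first match, or None if nothing matches
first = soup.find("div", class_="edition")
print(first.get_text())  # India
print(soup.find("div", class_="missing"))  # None
```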

But how did we know the exact tag and class name? We found them by inspecting the website with the browser's developer tools; in most browsers, right-clicking an element and choosing Inspect opens it in the page's HTML.

Clicking on the text we want shows the HTML that produces it, including its tag and class.
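Besides `find` and `find_all`, BeautifulSoup also accepts CSS selectors through `select()` and `select_one()`. This can be convenient because browser developer tools can usually copy a CSS selector for an inspected element. A sketch on hypothetical markup standing in for what the Inspect panel might show:

```python
from bs4 import BeautifulSoup

# Hypothetical markup, for illustration only
html = """
<article>
  <h1 class="ArticleHeader_headline">Sample headline</h1>
  <div class="ArticleHeader_date">Sample date</div>
</article>
"""

soup = BeautifulSoup(html, "html.parser")

# select() takes a CSS selector and returns a list of matching tags
headlines = soup.select("article h1.ArticleHeader_headline")
print(len(headlines))  # 1

# select_one() returns the first match, or None if there is none
date_tag = soup.select_one("div.ArticleHeader_date")
print(date_tag.get_text())  # Sample date
```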

Nice, we have all the basic building blocks to start scraping!

Building a real scraper

First, import the libraries:

```python
import pandas as pd
import requests
from bs4 import BeautifulSoup
```

For a simple scraper, let's focus on a single article and retrieve its headline, body and date/time.

```python
url = "https://in.reuters.com/article/apple-carbon/apple-to-remove-carbon-from-supply-chain-products-by-2030-idINKCN24M1LM"

# A browser User-Agent string, sent along with the request
headers = {'user-agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36'}

data = requests.get(url=url, headers=headers)
soup = BeautifulSoup(data.content, 'html.parser')
```

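Everything here should look familiar except the headers. Headers are additional information sent along with the HTTP request. The User-Agent header identifies the client making the request: by default, requests identifies itself as python-requests, and many websites block or treat differently clients that look like bots. Sending a browser's User-Agent string makes the request look like it comes from an ordinary browser. You can find your own browser's User-Agent string in its developer tools, for example in the request headers shown in the Network tab.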
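To check what actually gets sent, the request can be prepared without sending it. This sketch shows the default User-Agent of requests and confirms that a custom header replaces it; the User-Agent string and the example.com URL are placeholders, not values from the tutorial.

```python
import requests

# Placeholder browser-style User-Agent; substitute your own browser's string
headers = {"User-Agent": "Mozilla/5.0 (X11; Linux x86_64) ExampleBrowser/1.0"}

# Without custom headers, requests identifies itself as python-requests/<version>
default_ua = requests.utils.default_headers()["User-Agent"]
print(default_ua)

# Build (but do not send) a request, merging the session defaults with our headers
session = requests.Session()
prepared = session.prepare_request(
    requests.Request("GET", "https://example.com/", headers=headers)
)
print(prepared.headers["User-Agent"])  # Mozilla/5.0 (X11; Linux x86_64) ExampleBrowser/1.0
```

Header names are case-insensitive in requests, so the tutorial's lowercase `'user-agent'` key works the same way.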

Now that we have the data in a parsable form, let's extract the relevant information.

```python
headline = soup.find('h1', class_="ArticleHeader_headline").get_text()
body = soup.find('div', class_="StandardArticleBody_body").get_text()
date_and_time = soup.find('div', class_="ArticleHeader_date").get_text()
```

We call BeautifulSoup's get_text() method to get only the text, without the surrounding HTML tags.
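By default, get_text() concatenates every piece of text inside the element, including the newlines and indentation between tags. Its `separator` and `strip` parameters give cleaner output, as this sketch on a hypothetical snippet shows:

```python
from bs4 import BeautifulSoup

# Hypothetical article body, for illustration only
html = "<div class='body'>\n  <p>First paragraph.</p>\n  <p>Second paragraph.</p>\n</div>"
soup = BeautifulSoup(html, "html.parser")
body = soup.find("div", class_="body")

# Default: all text fragments joined as-is, whitespace between tags included
print(repr(body.get_text()))

# strip=True trims each fragment and drops empty ones; separator joins the rest
print(body.get_text(separator=" ", strip=True))  # First paragraph. Second paragraph.
```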

Finally, we can save the data as a CSV file using the pandas library.

```python
df_dic = {"Headline": headline, "Content": body, "Date/Time": date_and_time}
df = pd.DataFrame(df_dic, index=[0])
df.to_csv('Reuters.csv')
```

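Here we first create a dictionary containing all of the values, convert it into a single-row pandas DataFrame, and save it to a CSV file. The index=[0] is needed because every value in the dictionary is a scalar, and pandas refuses to build a DataFrame from scalars alone without an explicit index.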
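When scraping more than one article, a common pattern is to collect one dictionary per article in a list and build the DataFrame from that list, which yields one row per dictionary without an explicit index. A sketch with hypothetical placeholder rows, writing to an in-memory buffer instead of a file:

```python
import io

import pandas as pd

# Hypothetical rows; in practice each dict would come from one scraped article
rows = [
    {"Headline": "Headline A", "Content": "Body A", "Date/Time": "Date A"},
    {"Headline": "Headline B", "Content": "Body B", "Date/Time": "Date B"},
]

# A list of dicts gives one row per dict, so no index argument is needed
df = pd.DataFrame(rows)

# In-memory buffer for the demo; pass a filename such as "Reuters.csv" instead
buffer = io.StringIO()
df.to_csv(buffer, index=False)  # index=False omits the 0, 1, ... row-label column
print(buffer.getvalue())
```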

Congratulations! You have built your first web scraper. Check out part 2 for a more advanced and complete scraper.

Complete Code:

```python
import pandas as pd
import requests
from bs4 import BeautifulSoup

url = "https://in.reuters.com/article/apple-carbon/apple-to-remove-carbon-from-supply-chain-products-by-2030-idINKCN24M1LM"

# Send a browser User-Agent so the request looks like it comes from a browser
headers = {'user-agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36'}

data = requests.get(url=url, headers=headers)
soup = BeautifulSoup(data.content, 'html.parser')

# Extract the text of each element by its tag and class name
headline = soup.find('h1', class_="ArticleHeader_headline").get_text()
body = soup.find('div', class_="StandardArticleBody_body").get_text()
date_and_time = soup.find('div', class_="ArticleHeader_date").get_text()

# Save everything as a single-row CSV file
df_dic = {"Headline": headline, "Content": body, "Date/Time": date_and_time}
df = pd.DataFrame(df_dic, index=[0])
df.to_csv('Reuters.csv')
```
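Class names such as `ArticleHeader_headline` reflect the page's markup at the time of writing and may stop matching if the site changes. In that case `soup.find` returns `None`, and the chained `.get_text()` raises `AttributeError`. One way to fail more gracefully, and to test the parsing without the network, is to move the extraction into a function. This is a sketch; the helper names `text_of` and `parse_article` and the sample markup are hypothetical.

```python
from bs4 import BeautifulSoup


def text_of(soup, tag, class_name):
    """Return the stripped text of the first matching tag, or None if it is missing."""
    element = soup.find(tag, class_=class_name)
    return element.get_text(strip=True) if element is not None else None


def parse_article(html):
    """Extract headline, body and date/time using the tutorial's class names."""
    soup = BeautifulSoup(html, "html.parser")
    return {
        "Headline": text_of(soup, "h1", "ArticleHeader_headline"),
        "Content": text_of(soup, "div", "StandardArticleBody_body"),
        "Date/Time": text_of(soup, "div", "ArticleHeader_date"),
    }


# Hypothetical markup mimicking the structure the tutorial targets
sample = """
<h1 class="ArticleHeader_headline">Sample headline</h1>
<div class="ArticleHeader_date">Sample date</div>
<div class="StandardArticleBody_body"><p>Sample body.</p></div>
"""
print(parse_article(sample))
# {'Headline': 'Sample headline', 'Content': 'Sample body.', 'Date/Time': 'Sample date'}
```

The result can then be saved as before, for example with `pd.DataFrame([parse_article(data.text)]).to_csv("Reuters.csv")`, and any `None` values point to selectors that need updating.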